Spiders, crawlers, frogs, oh my! When it comes to your site’s health, the results can sometimes spook you.

You may know that you should regularly crawl a specific site domain to check for any unwanted anomalies like duplication and 300 or 400-level status codes—but are you paying attention to your website’s XML sitemap?

The XML sitemap should be combed through periodically to ensure search engines are receiving all of the correct signals. If not, you could be unknowingly hurting your rankings and negatively impacting user experience through missed response error codes or inaccurate noindex tags.

With the help of tools like Screaming Frog and Deep Crawl, it’s time to make these crawls a regular habit. That way, you won’t be frightened by what’s hiding in your sitemap.

What exactly is an XML Sitemap?

An XML sitemap provides search engines with a list of all pages on your site, even those that might not be found through their traditional crawling means.

The sitemap allows crawlers to understand the content better, which leads to a more comprehensive crawl. From there, thorough sitemap crawls make it easy to quickly maneuver through all pages seen by search engines to determine if there are any outstanding issues.

Still aren’t convinced a sitemap crawl is worth your time? These 3 reasons should change your mind.

1) It’s easy to clean up pesky response codes

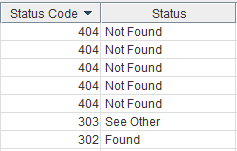

A sitemap crawl helps you to find and organize each one of your pages’ response codes. While there are a plethora of different HTTP response codes, there are a few common ones you should steer clear of.

Insider tip: Search engines are more likely to trust sitemaps that are free of error codes. Through an XML sitemap crawl, you might find pages that are returning codes you don’t want—and weren’t even aware of.

From there, your account analyst can modify the pages’ codes you need to change.

2) Canonicals are king

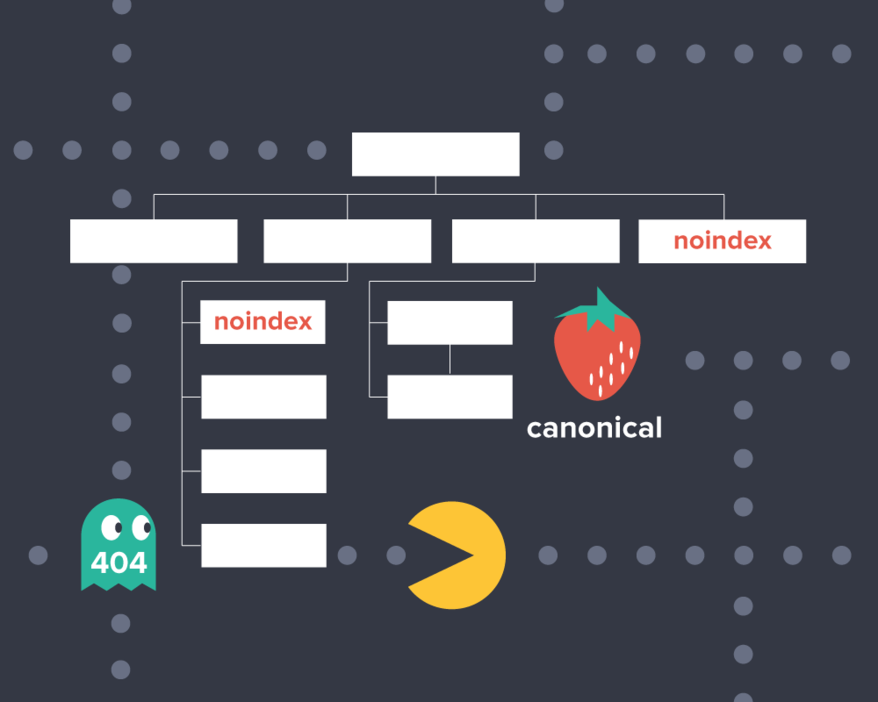

Sitemap crawls also give you the ability to see if and where specific URLs are canonicaling to. A canonical URL tag tells search engines that multiple pages should be seen as one, without actually redirecting the user to the new page.

![]()

While they are helpful for both web developers and search engines, you can run into problems if they are canonicaling to an incorrect or completely different URL.

If this is the case, search engines won’t know which URL is preferred, and they will end up ignoring them all. Sitemap crawls can pinpoint which URLs have canonicals and where they are pointing to without requiring someone to spot check each page one by one.

3) Noindex, no problem...?

Noindex tags let search engines know that you do not want specific pages to be indexed in the SERP. You want to use the noindex tag sparingly. They could apply to employee-only pages or thank you pages after a customer purchases a product or service.

Unfortunately, there are times when you’re completely unaware that a page is being noindexed. If an important page has a noindex tag on it, it won’t get traffic—and that’s a big deal.

Wondering how to avoid this problem? The handy dandy sitemap crawl comes to the rescue.

While you’re busy poking around in your newly completed crawl, you can easily filter and scan all pages appearing to not be indexed to make sure all of them belong there, and take note of those that need to be modified. Problem solved.

--

There you have it! Three (out of many!) reasons why you should be crawling your website’s sitemap. Any questions? Tweet us @Perfect_Search.