When it comes to SEO, it seems that the entire goal is to increase traffic and get your website seen as much as possible. Climbing to the top of the results page can sometimes seem like the solitary goal of all SEO analysts. However, it gets a little more nuanced than that.

In fact, there comes a time when SEO analysts are trying to do the very opposite. Sometimes, it’s necessary to keep certain pages off a search engine results page (SERP) and hidden away in your domain. Is your world shattered yet? Read on.

Why block a page?

Isn’t SEO all about optimizing pages so that they’re found? Not always. In fact, optimization can also mean hiding pages when necessary. These instances include pages that show duplicate content, a thank you page after a purchase, resource pages that contain images or text utilized during website development, and even your sitemap.

Since these pages aren’t all that useful in a SERP, you might be thinking, so what? Having as many pages indexed is still beneficial to your domain authority, right?

The old adage that “any press is good press” doesn’t totally apply to search engine results. Google and other search engines have their own guidelines for what they consider to be best practices.

Things like duplicate content can actually make search engines penalize your website. Keeping certain pages from being indexed is a necessary part of maintaining a website’s health.

So, how do you go about keeping a page from being crawled by a search engine? Let’s dive in.

1) Password protection

One of the more secure ways to block URLs from showing up in search results is to password-protect your server directories.

If you have content that is private or confidential and don’t want it open for anyone with the right keywords, you can store your URLs in a directory on your site server and make it only accessible to users with a password.

2) Robots.txt files

Another way to prevent crawlers from indexing your page is by utilizing a robots.txt file. A robots.txt file lets you list the pages on your site that you would like to disallow from search engine crawl bots. Search engines will then ignore these pages when they crawl the entirety of your site.

This method of blocking the indexing of certain webpages is used when you don’t want search engine crawlers to overwhelm your server or waste their time crawling unimportant pages.

It shouldn’t be used for when you want to hide your webpages from the public eye and keep them out of SERPs. That’s because other sites might link to a certain page that you’ve put in a robots.txt anyway, which would get it indexed.

It is also important to point out that robots.txt files are guidelines and cannot enforce crawler behavior. Respectable crawlers like Google will abide by their instructions but other crawlers might not.

To learn more, check out Google’s guide to implementing a robots.txt file.

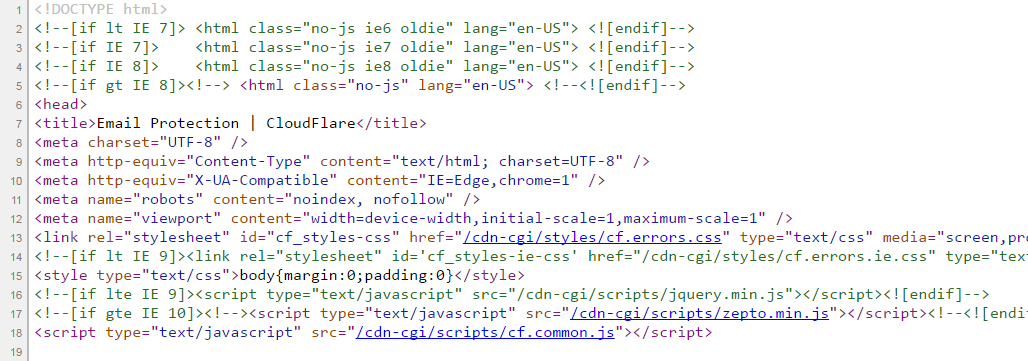

3) Noindex meta tags

If you’re looking for a way to block crawlers more effectively, you can use a noindex meta tag. This can be implemented by placing the tag into the <Head> section of your page. As you can see from the below URL, we have inserted a Noindex Tag on line 11.

This method prevents a page from appearing in search results completely. Even if another site links to your page, it still cannot show up in any SERP from any search engine.

This method is useful for blocking pages like a ‘thank you’ page or a shopping cart page on an e-commerce site. Both these pages are not meant to be landing pages and should only be accessed after multiple clicks when looking at a domain.

Caution: Do not mix

After exploring these methods of blocking a URL, you might think that it would be best to disallow a page in robots.txt and add a noindex tag. After all, better safe than sorry, right?

In theory, this seems like a safe choice. In fact, it has the opposite effect. When a URL has both blocking methods implemented, the robots.txt file will cause the crawler to skip the noindex meta tag.

This will only put the robots.txt file in effect, so your URL will still be able to show up in search results from other site links and from search engine crawlers that ignore the robots.txt directives.

--

Removing pages from the SERP is actually an important part of SEO. Who knew? (Well, we did.) By removing the right pages from the SERP, we’re able to focus on only showing relevant pages in the SERP and keeping them at the top.

If you liked this article, check our posts about identifying duplicate content and how to master internal linking.